Using continuous integration to automate incident response and malware analysis

Writer: Ville Kalliokoski

While incident response and malware analysis have to be done largely on a case-by-case basis, a sizable portion of threats are fairly similar, and the workflows for those cases overlap enough that at least parts of them can be automated. Luckily, software engineering has a long history of automating similar tasks, and there is a large ecosystem of testing and version management systems capable of handling the repetitive and time-consuming parts of the analysis.

Using these tools also provides benefits beyond a more streamlined workflow. When the automatic analysis gains new capabilities and you already have a repository of samples, you can run the new capabilities against those samples and possibly gain new insight into what you missed on previous runs. Even a single analysis can benefit from the repeatability and collaboration possibilities offered by recording the analysis workflow in a transparent, version-controlled form.

Here’s how we do it.

The pipeline

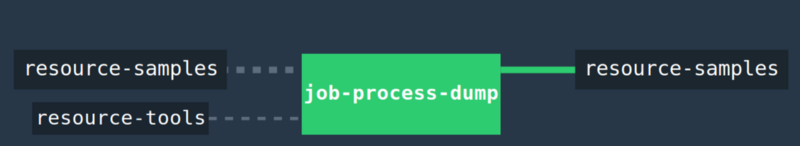

The current prototype is built using Docker, Git, Concourse CI and Volatility. Concourse is a continuous integration system that aims to be simple to understand and manage while providing all the necessary capabilities for automating testing, integration and deployment. Volatility is an open source memory forensics framework used for static analysis of memory dumps.

When Concourse notices new files in the sample repository, the pipeline starts by pulling the samples and scripts to the server. A shell script then starts a Docker container containing Volatility, deposits the samples and tool scripts in the base image and starts the toolchain.

General view of the pipeline in the Concourse GUI

Currently the pipeline determines the memory profile of the sample, identifies hidden processes in the memory sample and exports them as executables to an output folder. The executables, along with Volatility's text output saved to a text file, are then pushed back to the sample repository.
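The first step, determining the memory profile, can be sketched in Python as follows. This is a minimal illustration and not the project's actual code: it assumes the text comes from Volatility 2's `imageinfo` plugin and that the output contains a `Suggested Profile(s)` line shaped like the sample below. The names `suggested_profiles`, `pick_profile` and `SAMPLE_IMAGEINFO` are hypothetical.

```python
import re

# Assumed shape of the relevant part of Volatility 2 `imageinfo` output.
SAMPLE_IMAGEINFO = """\
          Suggested Profile(s) : WinXPSP2x86, WinXPSP3x86 (Instantiated with WinXPSP2x86)
                     AS Layer1 : IA32PagedMemoryPae (Kernel AS)
"""


def suggested_profiles(imageinfo_text):
    """Return the profiles named on the 'Suggested Profile(s)' line, in order."""
    match = re.search(r"Suggested Profile\(s\)\s*:\s*(.*)", imageinfo_text)
    if match is None:
        return []
    # Drop the trailing "(Instantiated with ...)" note, then split on commas.
    names = re.sub(r"\(.*?\)", "", match.group(1))
    profiles = [name.strip() for name in names.split(",") if name.strip()]
    # Assumption: a line reading "No suggestion" means no profile was found.
    return [p for p in profiles if p.lower() != "no suggestion"]


def pick_profile(imageinfo_text):
    """Pick the first suggested profile, or None when nothing was suggested."""
    profiles = suggested_profiles(imageinfo_text)
    return profiles[0] if profiles else None
```

Taking the first suggestion is only a heuristic. A more careful pipeline might try each suggested profile in turn and keep the one that produces sensible plugin output.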

Setting up the pipeline

After setting up the Concourse server, it is rather straightforward to use it to run the Volatility analyzers automatically on memory samples stored in a repository and push the results back there.

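    #!/bin/sh

    # Sample files, output folder and analyzer script.
    samples="resource-samples/samples/*"
    output="resource-dumped-processes/dump"
    toolscript="resource-tools/python-tools/volatility_hiddenprocessdump.py"

    # Run the analyzer once per sample; $samples stays unquoted so the glob expands.
    for filename in $samples
    do
        python "$toolscript" "$filename" "$output"
    done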

A simplified shell script used in Concourse to run the analyzers

Above is a simplified shell script used to run a Python script. The scripts used to run the analyzers and the pipeline are stored in another repository. The Python script is similarly simple, as it is just a collection of functions that automate the Volatility plugins needed for the analysis.

    import subprocess


    def dump_process(sample, output_path, pID, offset, profile):
        """ Dump executable of a process running in memory sample

        Keyword arguments:
        sample -- filename of the memory sample
        output_path -- output file path
        pID -- process ID of the process in sample
        offset -- memory offset of the process
        profile -- type of system, e.g. WinXPSP2x86
        """
        # Popen needs string arguments, so convert pID and offset explicitly.
        arguments = ["vol.py", "procdump", "-D", output_path, "-f", sample,
                     "-p", str(pID), "--offset=" + str(offset),
                     "--profile=" + profile]
        # Run Volatility's procdump plugin and wait for it to finish.
        dump = subprocess.Popen(arguments, universal_newlines=True)
        dump.wait()

A sample function from the Python script used to run Volatility plugins
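In the same spirit, a function that picks hidden processes out of plugin output might look like the sketch below. It is an illustration under stated assumptions, not the project's code: it assumes the text comes from Volatility 2's `psxview` plugin with the column layout shown in the sample, and it uses a simple heuristic (missing from `pslist` but found by `psscan`) that can also match processes that have already exited. The names `hidden_processes`, `ROW` and `SAMPLE_PSXVIEW`, and the process names in the sample, are hypothetical.

```python
import re

# Assumed column layout of Volatility 2 `psxview` output; the rows are made up.
SAMPLE_PSXVIEW = """\
Offset(P)  Name                    PID pslist psscan thrdproc pspcid csrss session deskthrd ExitTime
---------- -------------------- ------ ------ ------ -------- ------ ----- ------- -------- --------
0x06499b80 svchost.exe            1148 True   True   True     True   True  True    True
0x05f027e0 hidden.exe             1956 False  True   True     True   True  True    True
"""

# Offset, process name, PID, then the pslist and psscan columns.
ROW = re.compile(
    r"^(0x[0-9a-fA-F]+)\s+(.+?)\s+(\d+)\s+(True|False)\s+(True|False)\b"
)


def hidden_processes(psxview_text):
    """Return processes that psscan found but pslist did not."""
    hidden = []
    for line in psxview_text.splitlines():
        match = ROW.match(line)
        if match is None:
            continue  # header, separator or unrelated line
        offset, name, pid, in_pslist, in_psscan = match.groups()
        if in_pslist == "False" and in_psscan == "True":
            hidden.append({"offset": offset, "name": name, "pid": pid})
    return hidden
```

The PID and offset are kept as strings so that each entry could be handed to a function like `dump_process` without further conversion.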

Volatility is Python-based, so it could also be used as a library, but for the sake of portability we opted to run it in subprocesses.
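One way the subprocess approach can also cover saving the text output is sketched below. This is a hedged example rather than the project's implementation: `run_and_log` is a hypothetical helper, it assumes Python 3.5 or later for `subprocess.run`, and it works with any command line, for example an argument list like the one built in `dump_process`.

```python
import subprocess


def run_and_log(arguments, log_path):
    """Run a command, append its text output to log_path and return the output."""
    result = subprocess.run(
        arguments,
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,  # keep error messages in the same log
        universal_newlines=True,
    )
    with open(log_path, "a") as log:
        # Record the command line first so the log can be replayed later.
        log.write("$ " + " ".join(arguments) + "\n")
        log.write(result.stdout)
    if result.returncode != 0:
        raise RuntimeError(
            "command failed with exit code %d" % result.returncode
        )
    return result.stdout
```

Writing the log before checking the exit code means a failed run still leaves its output behind for inspection.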

You can find the complete scripts and configurations in this repository.

Going forward

While this prototype currently only works for a specific use case, it already provides the basic infrastructure we will need in the future. The next step in the project is to gather more general use cases and implement more pipelines for those, as well as work on making the framework more adjustable and flexible. The long-term goal is also to make this configurable so that users can connect different pipelines and tools more easily for different needs.

We are currently collecting data on incident response tool usage among infosec professionals so we can integrate the tools that end users are actually using. We would really appreciate you taking the time to complete our survey.

About the CinCan project

The aim of the project is to provide tools for automating the incident response and malware analysis toolchain. Our current plan for the automation is to use software development tools like CI pipelines and version management systems to provide an infrastructure for it. Our goal is a flexible, easy-to-use toolset that can be applied and modified to the needs of the user.

For more information about this project, see our GitLab. You can find the complete scripts and configurations of this pipeline here.

Original blog post: https://medium.com/ouspg/using-continuous-integration-to-automate-incident-response-and-malware-analysis-52cd9cfa1865